Artificial intelligence has been heralded as the next great frontier in software development, a force multiplier promising to compress timelines, eliminate tedious tasks, and unlock unprecedented levels of productivity. Engineering leaders are under immense pressure to adopt these tools, driven by executive mandates to “ship faster” and the fear of being outmaneuvered by more agile competitors. The market is saturated with AI-powered coding assistants, testing platforms, and deployment tools, all claiming to revolutionize the software development life cycle (SDLC).

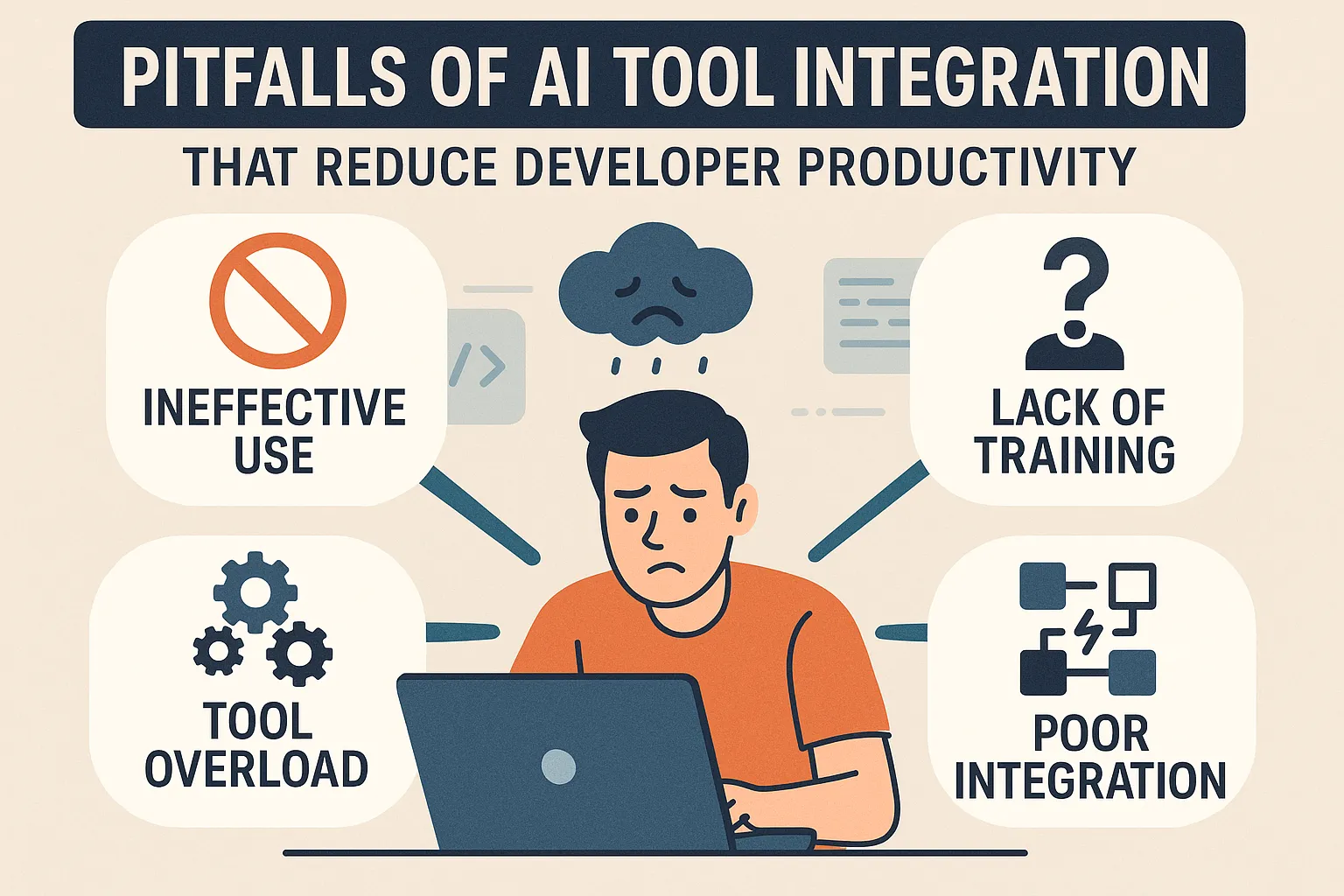

Yet a dissonant reality is emerging from the trenches. Many development teams are finding that the integration of AI isn’t the seamless accelerator they were promised. Instead of streamlining workflows, these tools can introduce new friction points, obscure logic, and create complex debugging challenges. Developers, once optimistic, can find themselves spending more time verifying, correcting, and untangling AI-generated code than it would have taken to write that code from scratch. This paradox, in which a tool designed for speed ultimately causes slowdowns, is a critical challenge for any organization looking to leverage AI effectively.

The problem isn’t the technology itself, but the strategy—or lack thereof—behind its adoption. Hasty, ad-hoc implementation without proper governance, vetting, or integration into existing workflows is a recipe for inefficiency. In this article, we will explore the underlying causes of AI-induced development slowdowns, from the inherent opacity of certain models to the practical challenges of managing their output. More importantly, we will outline a strategic path forward, showing how a methodical, expert-led approach can transform AI from a source of friction into a true catalyst for growth and innovation.

The Hidden Causes of AI-Induced Slowdowns

The promise of AI in development is seductive: generate complex functions in seconds, automate entire test suites, and receive instant feedback on code quality. However, when these tools are deployed without a deep understanding of their limitations, they can create more problems than they solve. The root causes of these slowdowns are often not immediately obvious, manifesting as subtle drains on productivity that accumulate over time. They stem from the fundamental nature of current generative AI technologies and the way teams interact with them.

The “Black Box” Problem: Lack of Transparency and Traceability

One of the most significant challenges with many generative AI models is their inherent opacity. They can feel like a “black box”: you provide an input, and you receive an output, but the internal logic that connects the two is often hidden from view. This lack of insight creates two critical and interrelated problems for development teams: poor transparency and a lack of traceability.

A lack of transparency into the origins of generated content means developers often cannot see the “why” behind the code. When an AI tool produces a complex algorithm or configuration script, it rarely provides a clear rationale or cites the specific documentation, libraries, or architectural patterns it referenced. This is profoundly different from a senior developer mentoring a junior one, where the explanation is as valuable as the code itself. Without this context, the developer is left with a piece of functional but mysterious logic. The immediate task may be complete, but the developer’s understanding hasn’t grown, and the team’s collective knowledge base stagnates. This becomes a significant bottleneck when the code needs to be modified, debugged, or integrated with other systems. The time saved during generation is quickly lost, and then some, during the subsequent maintenance and adaptation phases.

Compounding this issue is a lack of traceability of the root data. Generative AI models are trained on vast datasets of code, documentation, and online discussions. When a model generates output, it’s often impossible to trace it back to a specific source. This creates serious challenges for both iteration and risk management.

- Difficulty in Updating Models: If a developer cannot trace the data sources, it becomes incredibly difficult to update or refine the AI’s behavior. Imagine an AI tool that consistently generates code using a deprecated library. Without the ability to trace why it prefers that library and retrain it on newer, better sources, the team is forced to either manually correct the output every time or abandon the tool altogether. This manual oversight negates the very efficiency the tool was meant to provide.

- Difficulty in Scanning for Risks: The inability to trace root data is also a major security and compliance concern. Was the code snippet generated from a repository with a restrictive license? Does it contain subtle vulnerabilities inherited from its training data? Without traceability, conducting a proper risk assessment is nearly impossible. Teams are forced to treat AI-generated code with a higher degree of suspicion, requiring more intensive security reviews and static analysis, thereby slowing down the entire development pipeline.

The Hallucination Hazard and the Verification Tax

Beyond the black box problem lies another well-documented phenomenon of generative AI: hallucination. This occurs when a model generates responses that are factually incorrect, nonsensical, or simply inappropriate for the given context. It might invent a function that doesn’t exist in a library, confidently misrepresent an API’s parameters, or produce code that is syntactically correct but logically flawed in a subtle, hard-to-detect way.

For a developer, this is a significant productivity killer. Every piece of AI-generated code must be treated as potentially untrustworthy. This imposes a “verification tax” on the development process. Instead of simply accepting the generated output, a developer must engage in a multi-step process:

- Review: Carefully read and understand the generated code.

- Validate: Check if the functions, libraries, and methods it uses actually exist and are being used correctly.

- Test: Write and run unit tests to ensure the code behaves as expected under various conditions.

- Debug: If the code fails, the developer must debug logic they didn’t write, which is often more time-consuming than debugging their own.

This verification overhead can easily erode any time saved during the initial code generation. The issue is exacerbated by the insidious nature of some hallucinations. A complete fabrication is easy to spot, but a subtle logical error might pass initial code review and only be discovered later in the testing phase or, worse, in production.
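Part of the Validate step can be automated. The sketch below is one possible approach, not a complete checker: it parses a snippet with Python's standard `ast` module and reports attributes that the code calls on an imported module but that the module does not define. The function name `find_missing_attributes` and the sample snippet are hypothetical, and the check only covers plain `import x` statements with a single level of attribute access. It also imports the referenced modules, so it should only be run against trusted dependencies.

```python
import ast
import importlib


def find_missing_attributes(source: str) -> list[str]:
    """Return dotted names (e.g. 'math.fast_sqrt') that the code references
    on an imported module but that the module does not actually define."""
    tree = ast.parse(source)

    # Map each local alias to its real module name (`import x`, `import x as y`).
    aliases: dict[str, str] = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for item in node.names:
                aliases[item.asname or item.name] = item.name

    missing: set[str] = set()
    for node in ast.walk(tree):
        # Only one level of attribute access is handled: `alias.name`.
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in aliases
        ):
            module_name = aliases[node.value.id]
            try:
                module = importlib.import_module(module_name)
            except ImportError:
                # The module itself could not be imported at all.
                missing.add(module_name)
                continue
            if not hasattr(module, node.attr):
                missing.add(f"{module_name}.{node.attr}")
    return sorted(missing)


# Hypothetical AI-generated snippet: `math.fast_sqrt` does not exist.
generated = (
    "import math\n"
    "import json\n"
    "print(math.sqrt(4))\n"
    "print(math.fast_sqrt(4))\n"
    "json.loads('{}')\n"
)
print(find_missing_attributes(generated))
```

A check like this catches invented functions early, but it does nothing for the subtler logical errors described above, which still need tests and human review.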

To mitigate the risk of hallucinations, robust systems require two critical components:

- Source Referencing: Systems must be designed to point to specific articles, documentation, or trusted data sources. This provides the transparency and traceability needed for developers to quickly verify the information and build trust in the tool’s output.

- Human-in-the-Loop (HITL) Checking: Ultimately, there is no substitute for expert human oversight. Workflows must be designed to include a human-in-the-loop checking process, where experienced developers review and approve AI-generated contributions. While necessary for quality and safety, this adds another step to the development process that must be managed efficiently to avoid becoming a bottleneck itself.

When organizations fail to account for the black box problem and the hallucination hazard, they inevitably find their developers mired in a cycle of generating, verifying, and fixing, a process far slower than the traditional development methods they sought to replace.

The MetaCTO Approach: From Ad-Hoc Adoption to Strategic Enablement

Recognizing and understanding the pitfalls of AI integration is the first step. Overcoming them requires a deliberate, strategic approach that prioritizes stability, customizability, and a clear path toward maturity. At MetaCTO, we have over two decades of experience launching complex applications, and we’ve seen firsthand how unguided technology adoption can derail projects. Our approach is founded on the principle that AI tools must be servants to a sound engineering strategy, not the other way around. We guide our clients away from chaotic, ad-hoc experimentation and toward a structured framework that unlocks genuine, measurable productivity gains.

Building on a Proven, Scalable Foundation

The temptation to chase the newest, most-hyped AI tool is strong, but this often leads to building critical application components on unproven and volatile technology. This is a direct path to the productivity traps of opacity and unreliability. Our philosophy is different. MetaCTO’s tech stack, which includes AI, is built on proven tools and technologies that power fast, scalable apps. We don’t just adopt; we curate. Every tool and technology we integrate, especially in the AI space, is rigorously vetted for several key criteria:

- Maturity and Stability: Is the technology well-supported, with a history of reliability in production environments?

- Transparency: Does it offer sufficient insight into its operations to allow for effective debugging and maintenance?

- Scalability: Can it handle the demands of a growing user base without requiring a complete architectural overhaul?

- Community and Support: Is there a robust community and commercial support system to help resolve issues quickly?

By building on this proven foundation, we help our clients sidestep the “black box” problem. The AI components we integrate are chosen not just for their generative capabilities but for their predictability and maintainability. This ensures that when a developer uses an AI-assisted tool in our stack, the output is more reliable, easier to understand, and less likely to introduce obscure dependencies or hidden risks. This foundational stability is the first line of defense against AI-induced slowdowns.

Customizing the Tech Stack for Today’s Challenges and Tomorrow’s Growth

A proven tech stack is the starting point, not the final destination. Every application has unique requirements, constraints, and growth trajectories. A one-size-fits-all approach to AI integration is doomed to fail because it cannot account for this specific context. This is why we customize our tech stack, which includes AI, to meet specific requirements, ensuring the app is ready to handle today’s challenges and tomorrow’s growth.

This customization is where we directly address the hallucination hazard and the need for rigorous verification. For a project handling sensitive data, for instance, we might configure AI tools to draw only from a private, vetted knowledge base, drastically reducing the risk of hallucinations and ensuring compliance. We design and implement the necessary guardrails and workflows from day one, such as:

- Automated Source Checking: Integrating systems that require AI-generated code to be accompanied by citations to approved documentation.

- Structured Human-in-the-Loop Reviews: Building HITL processes directly into the CI/CD pipeline, ensuring that every AI contribution is validated by an expert without creating unnecessary delays.

- Context-Aware Configurations: Fine-tuning AI models and assistants to understand the specific architecture, coding standards, and business logic of the client’s application, making their suggestions far more relevant and accurate.
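As an illustration of automated source checking, here is a minimal sketch of a check that could run in a CI step. It assumes a team convention that is not described in this article: AI-assisted code carries a `# Source: <url>` comment, and the URL must point to a host on an approved list. The allowlist contents, the comment format, and the name `check_citations` are all hypothetical.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist; a real team would maintain its own.
APPROVED_DOC_HOSTS = {"docs.python.org", "developer.mozilla.org"}

# Hypothetical convention: AI-assisted code carries a "# Source: <url>" comment.
SOURCE_PATTERN = re.compile(r"#\s*Source:\s*(\S+)")


def check_citations(snippet: str) -> tuple[bool, list[str]]:
    """Return (ok, problems) for one AI-generated snippet."""
    urls = SOURCE_PATTERN.findall(snippet)
    if not urls:
        return False, ["no Source: citation found"]
    # Flag any cited URL whose host is not on the approved list.
    problems = [
        f"unapproved source: {url}"
        for url in urls
        if urlparse(url).hostname not in APPROVED_DOC_HOSTS
    ]
    return (not problems), problems


cited = "# Source: https://docs.python.org/3/library/json.html\nimport json\n"
uncited = "import json\n"
print(check_citations(cited))
print(check_citations(uncited))
```

A failing result would not block a merge on its own in this sketch; it is meant to route the change to the human-in-the-loop review with the reason attached.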

This bespoke approach ensures that the AI toolkit is perfectly aligned with the project’s needs, transforming it from a generic, unpredictable assistant into a highly specialized and trusted co-pilot for the development team.

Escaping “Experimental” Chaos with the AI-Enabled Engineering Maturity Index (AEMI)

Perhaps the most common reason AI slows teams down is that they get stuck in the early, chaotic stages of adoption. In our experience, most organizations are at Level 1 (Reactive) or Level 2 (Experimental) of AI maturity. At these stages, developers use a fragmented collection of free and paid tools with no oversight, no standardized best practices, and no way to measure impact. This is where you hear complaints like, “AI is making me slower… I’m just fixing bad code!”

To solve this, we developed the AI-Enabled Engineering Maturity Index (AEMI), a strategic framework for assessing and advancing a team’s AI capabilities. The AEMI provides a clear roadmap to move from chaos to competence. It helps organizations transition from the inefficient Experimental stage to Level 3 (Intentional), where the real productivity gains begin.

Moving to Level 3 involves:

- Standardizing Tools: Officially adopting and providing training for a curated set of AI tools from a proven tech stack.

- Establishing Governance: Creating formal policies and guidelines for how AI should be used in development, code review, and testing.

- Measuring Impact: Implementing metrics to track the effect of AI adoption on key performance indicators like PR cycle time, deployment frequency, and code quality.
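To make the measurement step concrete, the sketch below computes one of the metrics named above, PR cycle time, as the median number of hours between a pull request being opened and merged. The function name `median_cycle_hours` and the timestamps are made up for illustration; in practice the pairs would come from your Git hosting platform's export or API, which is not shown here.

```python
from datetime import datetime
from statistics import median


def median_cycle_hours(prs: list[tuple[str, str]]) -> float:
    """Median hours from PR opened to PR merged.

    Each item is an (opened, merged) pair of ISO 8601 timestamps.
    """
    durations = [
        (datetime.fromisoformat(merged) - datetime.fromisoformat(opened)).total_seconds() / 3600
        for opened, merged in prs
    ]
    return median(durations)


# Made-up sample data, for illustration only.
sample_prs = [
    ("2025-01-06T09:00", "2025-01-07T09:00"),  # 24 hours
    ("2025-01-08T10:00", "2025-01-08T16:00"),  # 6 hours
    ("2025-01-09T08:00", "2025-01-10T20:00"),  # 36 hours
]
print(median_cycle_hours(sample_prs))  # 24.0
```

The median is used rather than the mean so that a single long-running pull request does not dominate the figure. Tracking the same number before and after a tool is standardized gives a baseline for comparison.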

By using the AEMI framework, we provide clients with a structured path to AI adoption that avoids the common pitfalls. It transforms the conversation from “Are we using AI?” to “How can we use AI strategically to achieve specific, measurable business outcomes?” This methodical progression is the key to unlocking the true potential of artificial intelligence in software development.

How an Expert Agency Accelerates AI Development

Navigating the complexities of AI adoption while simultaneously managing the daily pressures of software delivery is an immense challenge for any in-house team. The learning curve is steep, the landscape of tools is constantly shifting, and a single strategic misstep can lead to months of wasted effort and frustrated developers. This is where partnering with a specialized agency like MetaCTO provides a decisive advantage. We act as a strategic accelerator, enabling your organization to bypass the costly and time-consuming trial-and-error phase of AI adoption.

Our value is rooted in a combination of deep experience, proven frameworks, and an unwavering focus on delivering measurable results. With over 20 years of app development experience and more than 100 successful projects delivered, we have cultivated a deep understanding of what it takes to build and scale robust, market-ready applications. We apply this same rigorous, experience-driven approach to AI integration.

- Vetted Technology and Experience: We have already done the legwork of evaluating countless AI tools. We know which ones deliver on their promises and which are merely hype. Our clients benefit from this accumulated knowledge, gaining immediate access to a curated, proven tech stack that is optimized for performance and maintainability. This saves you from investing precious time and resources into tools that will ultimately slow your team down.

- Strategic Frameworks for Predictable Success: We don’t just provide developers; we provide a strategy. Frameworks like our AI-Enabled Engineering Maturity Index (AEMI) offer a clear, actionable roadmap for AI adoption. We work with you to assess your current maturity level, identify critical gaps, and build a tailored plan to advance your capabilities systematically. This turns the vague executive mandate to “use AI” into a concrete project plan with defined milestones and KPIs, ensuring that every step taken is a step toward greater efficiency.

- A Data-Driven Focus on ROI: Proving the value of AI investments is a common struggle. We help you move beyond anecdotal evidence to hard data. By leveraging insights from industry-wide studies like our 2025 AI-Enablement Benchmark Report, we can help you set realistic goals and establish the right metrics to track your return on investment. We help you answer the critical questions: How is this tool affecting our deployment frequency? How has it impacted our code review times? This focus on measurement ensures that your AI strategy is not only technically sound but also financially justifiable.

- Proactive Mitigation of Common Pitfalls: Our development process is designed to proactively address the core causes of AI-induced slowdowns. We build systems with traceability, source referencing, and hallucination-mitigation in mind from the very beginning. An in-house team venturing into AI for the first time might learn these lessons the hard way, after months of painful debugging and refactoring. With MetaCTO, these best practices are baked into the development lifecycle from day one, ensuring your project is built correctly and efficiently.

Partnering with an expert agency is about de-risking your investment in AI. It’s about leveraging specialized expertise to achieve in months what might take an in-house team years to accomplish. We provide the strategy, the tools, and the experienced hands to ensure your journey into AI-enabled development is a successful and accelerated one.

Conclusion

The promise of AI to revolutionize software development is real, but it is not automatic. The path to unlocking its potential is littered with pitfalls that can, paradoxically, make teams slower, not faster. The unmanaged adoption of AI tools frequently leads to productivity drains stemming from the “black box” nature of models, which lack transparency and traceability, making code difficult to maintain and update. Furthermore, the persistent risk of AI “hallucinations” imposes a significant verification tax on developers, forcing them to spend precious time validating and debugging code they did not write.

Overcoming these challenges requires a shift from haphazard experimentation to a strategic, intentional approach. It demands building upon a foundation of proven, scalable technologies rather than chasing fleeting trends. It requires customizing the AI toolchain to fit the unique needs of each project and implementing robust guardrails and human-in-the-loop processes. Most importantly, it necessitates a clear roadmap for advancing organizational maturity, moving from a reactive stance to a truly AI-first culture.

At MetaCTO, we provide this strategic guidance. Our approach, built on over two decades of experience, combines a vetted technology stack with bespoke customization to solve today’s challenges and prepare for tomorrow’s growth. With frameworks like the AI-Enabled Engineering Maturity Index (AEMI), we provide the clarity and direction needed to transform your AI adoption from a source of friction into a powerful engine for innovation and speed.

If your team is struggling to realize the promised productivity gains from AI, or if you feel the pressure to adopt AI without a clear plan, it’s time to talk to an expert. Talk with an AI app development expert at MetaCTO to discuss how we can help you navigate these challenges and build a truly AI-enabled engineering team that delivers results.