The Engineering Leader’s Dilemma: Navigating the AI Mandate

As an engineering leader, you are at the epicenter of a seismic shift. The C-suite and board are looking at competitors, reading headlines, and asking the inevitable question: “Why aren’t we shipping faster with AI?” The pressure is immense. You’re expected to drive innovation, boost productivity by 40% or more, and integrate artificial intelligence across the entire software development lifecycle (SDLC).

Meanwhile, your team is on the front lines, navigating a chaotic landscape of new tools and workflows. One developer is experimenting with a new AI coding assistant, while another finds it slows them down by generating buggy code. There’s no standard, no governance, and most importantly, no clear way to measure the return on investment. This isn’t just your reality; it’s an industry-wide challenge. According to Forrester, 67% of engineering leaders feel intense pressure to adopt AI, yet a McKinsey report reveals that only about 1% consider their organizations fully AI-mature.

This chasm between expectation and reality creates a perfect storm: “Fear of Missing Out” (FOMO) drives hasty decisions, unclear ROI makes budget justification impossible, and the risk of being outpaced by more agile competitors is very real.

To cut through the noise and transform chaotic experimentation into a strategic advantage, you need a compass. You need a way to measure where you are, where you need to go, and how to get there. You need to benchmark your team’s AI capabilities. This comprehensive guide will walk you through a structured approach to assessing your team’s AI maturity, using frameworks like our AI-Enabled Engineering Maturity Index to build a clear, actionable roadmap for transformative growth.

What is AI-Enabled Engineering Benchmarking?

Benchmarking your AI capabilities is far more than a simple audit of which AI tools your developers are using. It is a holistic and continuous process of measuring your team’s performance against industry best practices and top performers. It provides an objective, data-driven view of your strengths and weaknesses across people, processes, and technology.

A proper benchmark doesn’t just ask, “Are we using AI?” It asks:

- Where in the SDLC are we leveraging AI effectively?

- How does our investment in AI tools compare to our peers?

- What is the measurable impact on our productivity, quality, and speed?

To answer these questions, you must assess your capabilities across the entire software development lifecycle. At MetaCTO, through our work with over 100 engineering teams, we’ve identified eight critical phases where AI can deliver substantial gains. Our 2025 AI-Enablement Benchmark Report analyzes adoption and impact across these very stages.

The 8 Key Phases of AI-Enabled Engineering

- Planning & Requirements: Using AI to analyze user feedback, generate user stories, and refine project scope.

- Design & Architecture: Leveraging AI for system design suggestions, creating diagrams, and exploring architectural patterns.

- Development & Coding: The most common starting point, with AI assistants generating code, writing unit tests, and debugging.

- Code Review & Collaboration: Employing AI tools to automatically review pull requests, suggest improvements, and summarize changes.

- Testing: Automating the generation of test cases, identifying edge cases, and analyzing test results for patterns.

- CI/CD & Deployment: Using AI to optimize build pipelines, predict deployment failures, and automate release notes.

- Monitoring & Observability: Applying AI to detect anomalies in real-time, predict potential outages, and accelerate root cause analysis.

- Communication & Documentation: Leveraging AI to automatically generate documentation, summarize meetings, and improve internal communication.

By evaluating your team’s proficiency in each of these areas, you move beyond anecdotal evidence and begin to build a complete picture of your AI maturity. This allows you to identify not just pockets of excellence, but critical gaps that are holding you back.
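One lightweight way to make this evaluation concrete is a per-phase scorecard. The sketch below assumes a hypothetical 0-4 proficiency rating for each of the eight phases (the scale, the thresholds, and the `summarize_phases` helper are illustrative choices, not part of MetaCTO's benchmark) and sorts the phases into strengths, gaps, and areas that are still developing.

```python
# Hypothetical 0-4 proficiency scale per SDLC phase (an assumption, not part of
# the AEMI definition): 0 = no AI use, 4 = AI deeply embedded and measured.
PHASES = [
    "Planning & Requirements",
    "Design & Architecture",
    "Development & Coding",
    "Code Review & Collaboration",
    "Testing",
    "CI/CD & Deployment",
    "Monitoring & Observability",
    "Communication & Documentation",
]


def summarize_phases(scores: dict[str, int], strong: int = 3, weak: int = 1) -> dict[str, list[str]]:
    """Split the eight phases into strengths, gaps, and the middle ground."""
    missing = [p for p in PHASES if p not in scores]
    if missing:
        raise ValueError(f"no score for: {missing}")
    summary: dict[str, list[str]] = {"strengths": [], "gaps": [], "developing": []}
    for phase in PHASES:
        score = scores[phase]
        if score >= strong:
            summary["strengths"].append(phase)
        elif score <= weak:
            summary["gaps"].append(phase)
        else:
            summary["developing"].append(phase)
    return summary


# Illustrative scores only: a team whose AI use is concentrated in coding.
example = {
    "Planning & Requirements": 1,
    "Design & Architecture": 0,
    "Development & Coding": 3,
    "Code Review & Collaboration": 2,
    "Testing": 1,
    "CI/CD & Deployment": 0,
    "Monitoring & Observability": 1,
    "Communication & Documentation": 2,
}
print(summarize_phases(example))
```

Scoring every phase, rather than only the ones where tools are already in use, is what surfaces the gaps alongside the pockets of excellence.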

A Framework for Growth: The AI-Enabled Engineering Maturity Index (AEMI)

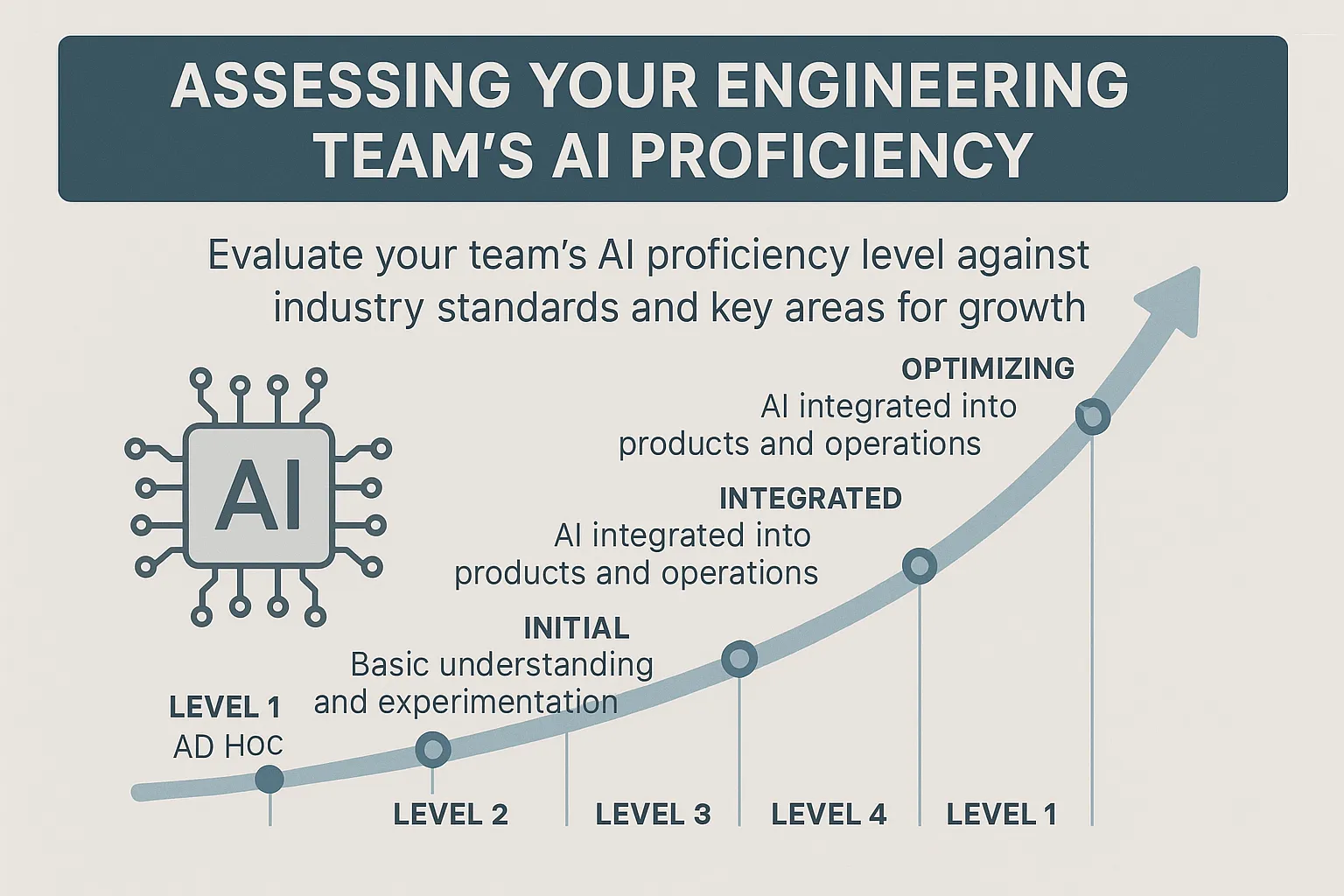

Understanding the “what” and “where” of benchmarking is the first step. The next is having a framework to interpret your findings and guide your strategy. To provide this clarity, we developed the AI-Enabled Engineering Maturity Index (AEMI). The AEMI is a five-tier model that provides a standardized benchmark for evaluating your team’s AI adoption, identifying gaps, and creating a clear roadmap to advance to the next level.

Each level represents a distinct stage of AI adoption, with specific characteristics related to awareness, tooling, governance, and productivity impact.

Level 1: Reactive

At this initial stage, there is minimal or no organizational awareness of AI’s potential in engineering. Any use is ad-hoc, driven by individual developers experimenting with free tools like ChatGPT. There are no policies, no governance, and no measurement of impact.

- Risk Profile: High. The organization is at significant risk of being outmaneuvered by competitors who are even moderately more advanced in their AI adoption. Productivity remains stagnant.

Level 2: Experimental

Awareness is growing, but it’s siloed. Small groups or individual “champions” are exploring AI tools, particularly coding assistants. You might see some anecdotal productivity gains, but these are inconsistent and unmeasured. Guidelines are just beginning to emerge, often in the form of shared best practices in a Slack channel, but there are no formal standards.

- Risk Profile: Moderate-High. While there are pockets of progress, the lack of a unified strategy leads to inconsistent results, potential security risks from ungoverned tool usage, and wasted effort.

Level 3: Intentional

This is a critical turning point. The organization makes a conscious decision to adopt AI strategically. There is team-wide awareness, often supported by formal training. The company invests in official, enterprise-grade AI tools. Most importantly, formal policies and governance are established for AI usage, particularly in code generation and review. At this level, you can begin to see measurable improvements in key metrics like pull request cycle time and deployment frequency.

- Risk Profile: Moderate. Reaching Level 3 puts you ahead of the vast majority of organizations today. You have a solid foundation and are keeping pace with the competition.

Level 4: Strategic

AI is no longer just a tool; it’s a core part of the engineering culture. AI fluency is high across the team, and its use is integrated broadly across most of the SDLC—from planning and coding to testing and security. Governance is mature, with regular reviews and proactive updates to policies. The impact is substantial, with engineering teams often achieving 50%+ gains in code integration and delivery speed.

- Risk Profile: Low. Your organization has a strong competitive edge and is often setting the pace for others in your industry.

Level 5: AI-First

This is the pinnacle of AI maturity. The engineering culture is fundamentally AI-driven. The team is not just using AI tools but is actively engaged in continuous upskilling and the adoption of cutting-edge practices. AI is ubiquitous, driving ML-powered optimizations, automated code refactoring, and real-time analytics. Governance is dynamic, using AI insights to adapt and optimize processes continuously.

- Risk Profile: Minimal. Your organization is at the forefront of innovation with significant, defensible competitive differentiation.

Summary of AEMI Levels

| Level | Stage Name | AI Awareness | AI Tooling & Usage | Process Maturity | Productivity Impact | Risk Exposure |
|---|---|---|---|---|---|---|
| 1 | Reactive | Minimal or none | Ad hoc, individual use | None (no governance) | Negligible | High (falling behind) |
| 2 | Experimental | Basic exploration | Early adoption (siloed) | Emerging guidelines | Informal | Moderate-High |
| 3 | Intentional | Good, team-wide | Defined use (coding + tests) | Formalized policies | Measurable gains | Moderate |
| 4 | Strategic | High, integrated | Broad adoption across SDLC | Mature governance | Substantial | Low |
| 5 | AI-First | AI-first culture | Deep, AI-driven workflows | Dynamic optimization | Industry-leading | Minimal |

This framework transforms the vague mandate to “use AI” into a concrete set of goals. It helps you justify investments, mitigate risks, and measure the real-world impact of your AI strategy on engineering performance.

How to Conduct Your Own AI Capability Benchmark

Using the AEMI framework, you can perform a systematic assessment of your team’s capabilities. This process is not a one-time event but a cycle of assessment, planning, and iteration.

Step 1: Assess Your Current State

The first step is to get an honest baseline of where you stand today. This involves both qualitative and quantitative data collection.

- Survey Your Team: Anonymously survey your engineers and managers. Ask about their current AI tool usage, their perceived productivity impact, their confidence in using AI, and the challenges they face.

- Analyze Tool Usage: If possible, gather data on which AI tools are being used. Are they sanctioned, enterprise tools or a patchwork of free, individual accounts?

- Review Processes: Examine your existing engineering playbooks. Is there any mention of AI? Are there guidelines for its use in code review or testing?

- Determine Your AEMI Level: Based on this information, map your organization to one of the five AEMI levels. Be honest. Most organizations today fall into Level 1 or 2.
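Because the AEMI describes each level along several dimensions, you need a rule for turning mixed signals into a single level. The sketch below rates the four capability columns of the summary table (awareness, tooling, process, impact) from 1 to 5 and, as an assumption of our own rather than an official AEMI rule, takes the lowest rating as the overall level, in keeping with the advice to be honest. The function and field names are hypothetical.

```python
from dataclasses import dataclass

LEVEL_NAMES = {1: "Reactive", 2: "Experimental", 3: "Intentional", 4: "Strategic", 5: "AI-First"}

# The four AEMI table columns that describe capability (risk exposure follows from them).
DIMENSIONS = ("awareness", "tooling", "process", "impact")


@dataclass
class Assessment:
    level: int
    name: str
    limiting: list[str]  # dimensions holding the overall level down


def aemi_level(ratings: dict[str, int]) -> Assessment:
    """Map per-dimension ratings (1-5, each judged against the AEMI table) to one level.

    Assumption: the overall level is the *lowest* dimension rating, so a team
    cannot claim Level 3 until every dimension meets Level 3 criteria.
    """
    for dim in DIMENSIONS:
        value = ratings.get(dim)
        if value not in LEVEL_NAMES:
            raise ValueError(f"{dim} must be rated 1-5, got {value!r}")
    level = min(ratings[d] for d in DIMENSIONS)
    limiting = [d for d in DIMENSIONS if ratings[d] == level]
    return Assessment(level, LEVEL_NAMES[level], limiting)


# Illustrative: developers are enthusiastic, but there is no governance yet.
print(aemi_level({"awareness": 3, "tooling": 2, "process": 1, "impact": 2}))
```

The "weakest link" rule is deliberately conservative; an average would let strong awareness mask missing governance, which is exactly the pattern that keeps teams stuck at Level 2.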

Step 2: Identify Critical Gaps

With your baseline established, compare your current state to the criteria for the next maturity level. This will reveal your most critical gaps.

- If you are Level 1 (Reactive), your primary gap is the lack of any formal strategy or awareness.

- If you are Level 2 (Experimental), your gaps are likely in standardization, governance, and measurement. You need to move from ad-hoc usage to official tool adoption and formal policies.

- If you are Level 3 (Intentional), your gaps may be in expanding AI usage beyond just coding into other areas of the SDLC like testing, CI/CD, and monitoring.
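The gap analysis can be as simple as a checklist diff. In the sketch below, the criteria for each level are paraphrased from the AEMI level descriptions; the exact wording, the `CRITERIA` mapping, and the `gaps_to_next_level` helper are illustrative assumptions you would replace with your own rubric.

```python
# Next-level criteria paraphrased from the AEMI level descriptions.
# The checklist wording and keys are illustrative assumptions.
CRITERIA: dict[int, set[str]] = {
    2: {"individual champions exploring AI tools", "informal usage guidelines shared"},
    3: {
        "formal AI training",
        "enterprise-grade AI tools adopted",
        "formal AI usage policies and governance",
        "baseline metrics tracked (e.g., PR cycle time)",
    },
    4: {
        "AI used across most SDLC phases",
        "governance reviewed regularly",
        "substantial, measured delivery gains",
    },
    5: {
        "continuous upskilling program",
        "AI-driven process optimization",
        "dynamic, insight-driven governance",
    },
}


def gaps_to_next_level(current_level: int, in_place: set[str]) -> list[str]:
    """Return the next level's criteria not yet in place, sorted for stable output."""
    if current_level not in range(1, 5):
        raise ValueError("current_level must be 1-4; Level 5 has no next level")
    return sorted(CRITERIA[current_level + 1] - in_place)


# Illustrative Level 2 team that has bought enterprise tools but nothing else.
print(gaps_to_next_level(2, {"enterprise-grade AI tools adopted"}))
```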

Step 3: Build an Actionable Roadmap

Your gap analysis forms the basis of your roadmap. Prioritize initiatives that will have the highest impact on moving you to the next level. A sample 6-month journey from Level 2 to Level 4 might look like this:

- Months 0-2 (Achieving Level 3):

  - Establish formal AI usage guidelines and a governance committee.

  - Select and deploy a standardized, enterprise-grade AI coding assistant for all developers.

  - Conduct formal training sessions and establish a baseline for key metrics (e.g., cycle time).

- Months 3-4 (Progressing toward Level 4):

  - Pilot AI-powered tools in a new area, such as automated testing or code review.

  - Measure the impact (e.g., hours saved per week, reduction in review time) and build a business case for wider adoption.

- Months 5-6 (Reaching Level 4):

  - Integrate AI into another critical area, like security scanning or deployment monitoring.

  - Demonstrate a significant, measurable improvement (e.g., 50% faster deployment cycles) and communicate the success to stakeholders.
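Targets like the 50% example in this roadmap are easier to defend when the arithmetic is explicit. This sketch computes the percentage reduction for a lower-is-better metric such as deployment cycle time or review time. It reads "50% faster" as a 50% reduction in cycle time, which is one reasonable interpretation, and the figures in the usage lines are hypothetical.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Percentage reduction for a lower-is-better metric (cycle time, review hours)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100


def meets_target(baseline: float, current: float, target_pct: float) -> bool:
    """True if the metric improved by at least target_pct percent."""
    return percent_reduction(baseline, current) >= target_pct


# Hypothetical numbers: a 10-day median deployment cycle at the Month 0 baseline
# and 4.5 days at Month 6, checked against a 50% reduction target.
print(f"{percent_reduction(10, 4.5):.1f}% reduction")  # 55.0% reduction
print(meets_target(10, 4.5, 50))  # True
```

Recording the baseline in Months 0-2 is what makes this calculation possible at Month 6; without it, the improvement claim has nothing to be measured against.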

Step 4: Pilot, Measure, and Prove Value

Avoid a big-bang rollout. Start with a pilot team that is enthusiastic about AI. This allows you to test your strategy, work out the kinks, and gather data on the impact. Track clear metrics before and after the pilot:

- Velocity: Pull request cycle time, deployment frequency.

- Quality: Number of production bugs, change failure rate.

- Adoption: Percentage of developers actively using the new tools and workflows.
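The before/after comparison only works if each metric is computed the same way in both windows. Below is a minimal sketch, assuming you can export pull-request open/merge timestamps, deployment records flagged for production failures, and the list of active tool users. The record types and the `snapshot` helper are hypothetical, and change failure rate follows the common DORA-style definition (failed deployments divided by total deployments). Run `snapshot` once for the pre-pilot window and once for the pilot window, then compare.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    opened: datetime
    merged: datetime


@dataclass
class Deployment:
    at: datetime
    caused_failure: bool  # True if it needed a rollback, hotfix, or incident response


def snapshot(prs: list[PullRequest], deploys: list[Deployment], weeks: float,
             active_users: set[str], team: set[str]) -> dict[str, float]:
    """Velocity, quality, and adoption metrics for one measurement window."""
    if not prs or not deploys or not team or weeks <= 0:
        raise ValueError("need PRs, deployments, a non-empty team, and a positive window")
    cycle_hours = [(pr.merged - pr.opened).total_seconds() / 3600 for pr in prs]
    failures = sum(d.caused_failure for d in deploys)
    return {
        # Velocity
        "median_pr_cycle_hours": median(cycle_hours),
        "deploys_per_week": len(deploys) / weeks,
        # Quality
        "change_failure_rate": failures / len(deploys),
        # Adoption: share of the pilot team actively using the new tools
        "adoption_rate": len(active_users & team) / len(team),
    }


# Hypothetical two-week pilot window.
start = datetime(2025, 3, 3, 9, 0)
prs = [PullRequest(start, start + timedelta(hours=h)) for h in (20, 30, 50)]
deploys = [Deployment(start + timedelta(days=d), failed)
           for d, failed in [(1, False), (3, True), (8, False), (10, False)]]
team = {"ana", "ben", "chen", "dev"}
print(snapshot(prs, deploys, weeks=2, active_users={"ana", "chen", "eve"}, team=team))
```

The median is used for cycle time because a few long-lived pull requests can skew a mean badly; adoption is intersected with the pilot team so that users outside the pilot do not inflate it.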

Use this data to prove the value of your initiatives and build momentum for a broader rollout.

Step 5: Scale and Iterate

Once a pilot has proven successful, scale the initiative across the wider engineering organization. However, the process doesn’t end there. The AI landscape is evolving at an incredible pace. Continuously reassess your maturity, look for new opportunities, and refine your roadmap. Benchmarking is a journey of continuous improvement.

The Role of an AI Development Partner in Accelerating Your Journey

Navigating the path from a reactive to an AI-first engineering culture is a formidable challenge. It requires specialized knowledge, significant resources, and a focused strategy. This is where partnering with a specialist AI development agency like MetaCTO can be a powerful accelerator. Engaging with an external firm provides numerous benefits that can help you leapfrog maturity levels more efficiently and effectively.

Immediate Access to Elite Expertise

Building an in-house team with deep AI expertise is slow and expensive. Partnering with a firm like ours gives you immediate access to elite-level knowledge without the ongoing costs of recruiting specialized staff and funding their training. Our AI experts bring extensive experience to the table, helping you navigate the complexities of AI adoption and ensuring that the custom AI solutions we build are both current and aligned with your specific business requirements.

Accelerated Timelines and Rapid Implementation

Time is a critical competitive factor. Working with seasoned AI experts can significantly shorten your time to market. External AI development companies often come equipped with pre-built, fine-tuned models and established best practices that speed up implementation, helping your business gain a strategic advantage over competitors in your sector.

Strategic Focus and Increased Productivity

By offloading the complexities of AI implementation, you free your internal team to focus on its core business objectives, which raises overall productivity. Partnering with AI consultants also helps you streamline operations and improve efficiency. We provide the strategic guidance needed for complex goals like optimizing supply chains, enhancing customer experiences, or developing predictive analytics, so your team can execute on its core mission.

Scalable, Flexible, and Customized Solutions

As your business grows, your AI needs will evolve. AI development firms provide scalable solutions that are crucial for accommodating this growth. We frequently deliver cloud-based AI solutions that can adjust to expanding business requirements. This scalability ensures that you can start with small implementations and gradually expand your AI capabilities without a significant upfront investment. Furthermore, a dedicated AI partner can customize models and provide tailored solutions that directly address your company’s specific challenges, making the AI integration both effective and seamless.

Cost-Effectiveness

While it may seem counterintuitive, partnering with an AI development company can lead to considerable cost reductions. You avoid the expense and lead time of building and maintaining an internal AI team, cloud-based solutions may be more cost-effective in the short term, and the ability to scale without massive capital investment provides significant financial flexibility.

Navigating Compliance and Ethical Considerations

The adoption of AI comes with a complex web of regulatory and ethical challenges. An experienced partner is crucial for navigating this landscape. Our consulting services provide comprehensive support for ensuring compliance and security in AI development. We guide organizations through the complexities of regulations like GDPR, CCPA/CPRA, and HIPAA. Moreover, our consultants emphasize adherence to ethical guidelines, promoting responsible development that helps preserve confidence in artificial intelligence among your users and stakeholders.

Conclusion: From Reactive to Revolutionary

The pressure to integrate AI into your engineering practices is not going away. It is the new baseline for competitive advantage. The difference between the winners and losers will be determined not by whether they adopt AI, but by how. A chaotic, reactive approach will lead to wasted resources, frustrated teams, and missed opportunities. A strategic, benchmark-driven approach will unlock unprecedented levels of productivity, innovation, and market leadership.

This guide has provided a comprehensive roadmap for this journey. We’ve explored the importance of benchmarking across the entire SDLC, introduced the AI-Enabled Engineering Maturity Index (AEMI) as a powerful framework for self-assessment, and outlined a five-step process for building a roadmap to advance your capabilities. We’ve also highlighted how a dedicated AI partner can act as a powerful catalyst, providing the expertise, resources, and strategic guidance to accelerate your transformation.

The path from Level 1 to Level 5 is a challenging one, but it is also one of the most rewarding investments you can make in your team and your company’s future. You don’t have to walk it alone.

Ready to move from reactive AI adoption to a strategic, AI-first culture? Talk with an AI app development expert at MetaCTO today. We can help you assess your current maturity, build a clear roadmap, and implement the solutions that drive real results.