Introduction

In the age of Big Data, businesses are drowning in information. The ability to process, analyze, and derive insights from massive datasets is no longer a luxury but a competitive necessity. This is where Apache Hadoop enters the picture: an open-source framework designed to store and process petabytes of data across clusters of commodity hardware. However, building an application on this framework is a formidable challenge. The inherent complexity of Hadoop’s distributed architecture, coupled with the need for specialized expertise, makes in-house Hadoop app development a path fraught with obstacles.

Many organizations underestimate the technical depth required not only to set up a Hadoop cluster but also to build a robust, scalable, and efficient application on top of it. From managing distributed file systems to optimizing parallel processing jobs, the journey is resource-intensive and requires a rare skill set.

This article serves as a comprehensive guide to Hadoop app development. We will demystify the technology by breaking down its core architecture, explore the significant reasons why developing a Hadoop application in-house is so difficult, and discuss the landscape of development partners who can help. As a top US AI-powered mobile app development firm, we at MetaCTO have over 20 years of experience turning complex technological concepts into successful, market-ready products. We understand the intricacies of integrating powerful backend systems like Hadoop into seamless user applications, and we will share our insights on how to navigate this process successfully.

What is a Hadoop Application?

At its core, a Hadoop application is a piece of software that leverages the Hadoop framework to store, process, and analyze Big Data. It isn’t a standalone program in the traditional sense; rather, it’s a system that runs on top of Hadoop’s distributed ecosystem. Hadoop, written in Java, provides the foundational layers for data storage and processing, allowing applications to perform complex computations on vast datasets in a parallel and distributed manner.

To truly understand what a Hadoop application does, one must first grasp the architecture it relies on. The Hadoop framework is primarily composed of four essential components, each with a distinct and critical role.

Hadoop’s Core Architectural Components

The power and complexity of Hadoop lie in its modular architecture. An application built for this ecosystem must interact seamlessly with these four pillars:

- HDFS (Hadoop Distributed File System): The storage layer.

- MapReduce: The original processing model.

- YARN (Yet Another Resource Negotiator): The cluster resource management layer.

- Hadoop Common: The set of common utilities and libraries that support the other components.

Let’s explore each of these in detail.

HDFS: The Foundation for Big Data Storage

HDFS is a distributed file system designed specifically to run on large clusters of inexpensive, commodity hardware. It is the bedrock of Hadoop’s storage capabilities, providing a reliable and scalable home for enormous datasets.

- Distributed Design and Large Blocks: HDFS is architected to store data across many machines in large chunks known as blocks. By default, each block is 128 MB, a value that can be changed through the dfs.blocksize setting. When a large file is ingested into HDFS, Hadoop doesn’t interpret the content; it simply splits the file into 128 MB blocks (only the final block may be smaller) and distributes them across the nodes in the cluster. Using much larger blocks than traditional file systems is key to its efficiency: fewer blocks means less metadata to manage, and each block can be read sequentially with fewer disk seeks, which speeds up the processing of large files.

- Fault Tolerance and High Availability: HDFS is built for resilience. It assumes that hardware failure is not an exception but a common occurrence in a large cluster. To guard against data loss, it employs a replication mechanism. By default, every data block is copied and stored on three different machines (a replication factor of 3). If one node fails, the data is still available from the other replicas. This ensures high availability and prevents data loss, which is critical for business operations.

- Architecture: NameNode and DataNode: HDFS operates on a master-slave architecture.

- NameNode (Master): This is the centerpiece of the HDFS cluster. The NameNode doesn’t store the actual data itself. Instead, it manages the file system’s namespace and maintains the metadata for all files and directories. This metadata includes file names, sizes, permissions, and, most importantly, the location of each data block across the DataNodes. It controls file operations like creating, deleting, and replicating blocks, directing the DataNodes to perform these tasks.

- DataNode (Slave): These are the workhorses of HDFS. DataNodes store the actual data blocks on their local disks and are responsible for handling read and write requests from clients. They regularly communicate with the NameNode, sending “heartbeats” to confirm they are alive and “block reports” to list the blocks they are storing. The more DataNodes a cluster has, the more storage capacity it offers and, generally, the more data it can process in parallel.

- Rack Awareness: To optimize network traffic and improve performance, HDFS can be made “rack-aware.” The NameNode uses this information about the cluster’s network topology to place replicas intelligently. For instance, it might place one replica on a node in one rack and the other two replicas on nodes in a different rack. This strategy ensures that even if an entire rack fails (e.g., due to a power outage or network switch failure), the data remains accessible. It also helps in reducing inter-rack data transfer, which is typically slower than intra-rack communication, thereby improving read/write performance.
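To make the block and replica mechanics concrete, here is a minimal Python sketch of what the NameNode conceptually records: a file split into fixed-size blocks, each mapped to three DataNodes. It assumes the 128 MB default block size, a replication factor of 3, a writer that is itself a DataNode, and a made-up two-rack topology. The node names and helper functions are hypothetical, and the placement rule is a simplified version of the policy described above (first replica on the writer’s node, the other two on different nodes of one remote rack), not HDFS’s actual code.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the default dfs.blocksize
REPLICATION = 3                 # default dfs.replication

# Hypothetical topology: rack name -> DataNodes on that rack
TOPOLOGY = {
    "/rack1": ["dn1", "dn2", "dn3"],
    "/rack2": ["dn4", "dn5", "dn6"],
}


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size of each block; only the last block may be smaller."""
    n_blocks = math.ceil(file_size / block_size)
    sizes = [block_size] * n_blocks
    if n_blocks and file_size % block_size:
        sizes[-1] = file_size % block_size
    return sizes


def rack_of(node: str) -> str:
    return next(rack for rack, nodes in TOPOLOGY.items() if node in nodes)


def place_replicas(writer: str, block_index: int) -> list[str]:
    """Simplified rack-aware placement: writer's node, then two nodes on one remote rack."""
    local_rack = rack_of(writer)
    remote_racks = [r for r in TOPOLOGY if r != local_rack]
    # Rotate through remote racks and their nodes so blocks spread across the cluster
    remote = TOPOLOGY[remote_racks[block_index % len(remote_racks)]]
    first = remote[block_index % len(remote)]
    second = remote[(block_index + 1) % len(remote)]
    return [writer, first, second][:REPLICATION]


def build_block_map(file_size: int, writer: str) -> dict[int, tuple[int, list[str]]]:
    """What the NameNode conceptually tracks: block id -> (size, DataNode locations)."""
    return {
        i: (size, place_replicas(writer, i))
        for i, size in enumerate(split_into_blocks(file_size))
    }


if __name__ == "__main__":
    file_size = 1024 * 1024 * 1024 + 10 * 1024 * 1024  # a 1 GB + 10 MB file
    for block_id, (size, nodes) in build_block_map(file_size, writer="dn1").items():
        print(block_id, size // (1024 * 1024), "MB", nodes)
```

Losing either rack in this layout still leaves at least one replica of every block, which is the property rack awareness is meant to guarantee.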

MapReduce: The Parallel Processing Engine

MapReduce is the programming model and processing engine that made Hadoop famous. Since Hadoop 2, it runs as an application on top of YARN. Its main feature is the ability to perform distributed, parallel processing of enormous datasets across the entire cluster, which significantly boosts performance. MapReduce splits a large processing workload into two distinct phases: Map and Reduce.

The MapReduce Workflow:

- Input: The process begins with input data, which is typically stored in HDFS.

- Map Phase: The input data is read and broken down into key-value pairs. A user-defined function, the Mapper, processes each of these pairs and may output zero or more new key-value pairs.

- Reduce Phase: The intermediate key-value pairs generated by the Mappers are shuffled, sorted, and then passed to the Reducer. The Reducer function aggregates the values for each key and performs the required operations, such as counting, summing, or filtering, to produce the final output.

- Output: The final result is written back to HDFS.
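The four steps above can be simulated in a few lines of plain Python using the classic word-count example. This is a single-process sketch of the data flow only, not Hadoop code: the function names are hypothetical, the input is an in-memory list of lines rather than an HDFS file, and the shuffle is a local sort rather than a network transfer.

```python
from itertools import groupby
from operator import itemgetter
from typing import Iterable, Iterator


def mapper(offset: int, line: str) -> Iterator[tuple[str, int]]:
    """Map phase: emit (word, 1) for every word in the line."""
    for word in line.lower().split():
        yield word, 1


def reducer(word: str, counts: Iterable[int]) -> tuple[str, int]:
    """Reduce phase: sum all the counts emitted for one word."""
    return word, sum(counts)


def run_job(lines: list[str]) -> list[tuple[str, int]]:
    # Input: each record is (position, line), loosely mirroring keyed text input
    records = enumerate(lines)
    # Map: apply the mapper to every input record
    intermediate = [pair for offset, line in records for pair in mapper(offset, line)]
    # Shuffle and sort: bring all values for the same key together, ordered by key
    intermediate.sort(key=itemgetter(0))
    # Reduce: call the reducer once per distinct key
    return [
        reducer(word, (count for _, count in group))
        for word, group in groupby(intermediate, key=itemgetter(0))
    ]


if __name__ == "__main__":
    output = run_job(["the quick brown fox", "the lazy dog", "The end"])
    for word, count in output:
        print(f"{word}\t{count}")  # Output step: one record per line
```

In a real job, the Map calls run in parallel on the nodes holding each block, and the sort happens across the network; the order of operations is the same.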

Map Task Components:

- RecordReader: Reads the raw input data from HDFS and converts it into key-value pairs suitable for the Mapper. With the default TextInputFormat, for example, the key is the byte offset of each line within the file and the value is the text of the line.

- Mapper: The user-defined function that contains the core logic for processing each input pair.

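- Combiner (Optional): An optimization step that acts as a “mini-reducer” on the local node where the Map task ran. It summarizes the Mapper’s output before it is sent over the network to the Reducers, which can significantly reduce data transfer. Because Hadoop may run the Combiner zero, one, or several times, it should only perform operations, such as summing, whose result does not depend on how many times it is applied.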

- Partitioner: Determines which Reducer will receive a particular key-value pair from the Mapper. The default HashPartitioner computes (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks, which spreads keys roughly evenly across the available Reducers and guarantees that all pairs with the same key reach the same Reducer.
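The Combiner and Partitioner can be sketched the same way. The hypothetical `java_string_hash` helper below reproduces Java’s `String.hashCode()` and stands in for the key’s `hashCode()`; Hadoop’s `Text` keys hash their UTF-8 bytes differently, so the exact Reducer numbers are illustrative only. The sign-bit mask mirrors the default HashPartitioner, which keeps negative hash codes from producing a negative partition index. The combiner is a local sum, which is safe to apply any number of times.

```python
from collections import Counter


def java_string_hash(s: str) -> int:
    """Java's String.hashCode(): s[0]*31^(n-1) + ... + s[n-1] as a signed 32-bit int.

    Matches Java for characters in the Basic Multilingual Plane.
    """
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF
    return h - (1 << 32) if h >= (1 << 31) else h


def partition(key: str, num_reducers: int) -> int:
    """Hash partitioning: clear the sign bit, then take the modulus."""
    return (java_string_hash(key) & 0x7FFFFFFF) % num_reducers


def combine(map_output: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Combiner: pre-sum counts on the map node so fewer pairs cross the network."""
    totals: Counter[str] = Counter()
    for word, count in map_output:
        totals[word] += count
    return sorted(totals.items())


if __name__ == "__main__":
    map_output = [("the", 1), ("fox", 1), ("the", 1), ("dog", 1), ("the", 1)]
    combined = combine(map_output)  # 5 pairs shrink to 3
    for word, count in combined:
        print(word, count, "-> reducer", partition(word, num_reducers=4))
```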

Reduce Task Components:

- Shuffle and Sort: This is the process where the intermediate key-value pairs from all Mappers are transferred (shuffled) to the appropriate Reducers and then merged and sorted by key. Copying can begin as soon as the first Mappers finish, overlapping network transfer with the remaining map work, although the Reducer function itself cannot run until every Mapper has completed.

- Reducer: The user-defined function that receives the grouped and sorted key-value pairs. It performs the final aggregation or filtering based on the business logic.

- OutputFormat: The final output is written to HDFS by the OutputFormat’s RecordWriter. With the default TextOutputFormat, each line represents a single record, with the key and value separated by a tab character.
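On the reduce side, each Reducer receives several map outputs that are already sorted by key, so it can merge them in one pass instead of re-sorting everything. The sketch below illustrates that merge-then-group pattern with Python’s `heapq.merge` and `itertools.groupby`, and writes records in the tab-separated layout of TextOutputFormat. It is a single-process illustration with a hypothetical function name, not Hadoop’s merge implementation.

```python
import heapq
from itertools import groupby
from operator import itemgetter


def reduce_side(sorted_map_outputs: list[list[tuple[str, int]]]) -> list[str]:
    # Sort step: k-way merge of runs that each Mapper already sorted by key
    merged = heapq.merge(*sorted_map_outputs, key=itemgetter(0))
    lines = []
    # Reduce step: one aggregation per key over all of that key's values
    for key, group in groupby(merged, key=itemgetter(0)):
        total = sum(value for _, value in group)
        # RecordWriter step: key and value separated by a tab, one record per line
        lines.append(f"{key}\t{total}")
    return lines


if __name__ == "__main__":
    from_mapper_1 = [("dog", 1), ("the", 2)]
    from_mapper_2 = [("cat", 1), ("the", 1)]
    print("\n".join(reduce_side([from_mapper_1, from_mapper_2])))
```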

YARN: The Modern Resource Manager

As the Hadoop ecosystem evolved, the resource management and job scheduling functions originally tied to MapReduce proved to be a bottleneck. YARN (Yet Another Resource Negotiator) was introduced to decouple these responsibilities, making Hadoop a more versatile and efficient multi-application platform.

- Core Responsibilities: YARN handles two primary duties:

- Job Scheduling: Large jobs are broken down into smaller tasks, and YARN schedules those tasks onto nodes across the cluster. It enforces job priorities and queue limits and tracks each application’s execution progress.

- Resource Management: It allocates and manages the cluster’s resources (CPU, memory, etc.) required for running these jobs, ensuring that resources are used efficiently.

- Key Features:

- Multi-Tenancy: YARN allows multiple data processing engines—like MapReduce, Apache Spark, and others—to run concurrently on the same cluster, sharing resources.

- Scalability: It is designed to scale and manage thousands of nodes and jobs simultaneously.

- Better Cluster Utilization: By allocating resources dynamically, YARN maximizes their usage across the cluster and reduces idle capacity.

- Compatibility: Applications written for the original MapReduce framework can run on YARN; most need no code changes, although some must be recompiled.
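The split between resource management and scheduling can be illustrated with a toy allocator: each node advertises free memory and vcores, and each container request is placed on the first node with enough room. This is a hypothetical first-fit sketch of container allocation only; YARN’s real schedulers (such as the Capacity Scheduler and Fair Scheduler) add queues, priorities, and data-locality preferences, and none of the names below come from the YARN API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_mb: int
    free_vcores: int
    containers: list[str] = field(default_factory=list)


@dataclass
class ContainerRequest:
    app: str
    mb: int
    vcores: int


def allocate(nodes: list[Node], requests: list[ContainerRequest]) -> list[ContainerRequest]:
    """First-fit allocation; returns the requests that could not be placed."""
    pending = []
    for req in requests:
        for node in nodes:
            if node.free_mb >= req.mb and node.free_vcores >= req.vcores:
                node.free_mb -= req.mb
                node.free_vcores -= req.vcores
                node.containers.append(req.app)
                break
        else:
            pending.append(req)  # no node has room; a real scheduler would queue it
    return pending


if __name__ == "__main__":
    cluster = [Node("node1", 8192, 4), Node("node2", 8192, 4)]
    # Two different engines sharing the same cluster
    requests = [ContainerRequest("mapreduce-job", 4096, 2) for _ in range(3)]
    requests += [ContainerRequest("spark-app", 4096, 2) for _ in range(2)]
    unplaced = allocate(cluster, requests)
    for node in cluster:
        print(node.name, node.containers, node.free_mb, "MB free")
    print("waiting:", [r.app for r in unplaced])
```

The last Spark request waits because both nodes are full, which is the kind of contention a real scheduler’s queues and priorities are designed to arbitrate.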

Hadoop Common: The Supporting Libraries

Hadoop Common, also known as Common Utilities, is the glue that holds the ecosystem together. It comprises the essential Java libraries and scripts required by all other Hadoop components. It provides critical functionalities such as:

- File system and I/O operations.

- Configuration and logging.

- Security and authentication.

- Network communication.

Hadoop Common ensures that the entire cluster works cohesively. Together with HDFS replication and the framework’s ability to rerun failed tasks, it allows hardware failures, which are expected in a commodity hardware environment, to be handled automatically at the software level.
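Configuration is a good example of what Hadoop Common provides: every component reads the same XML files, such as core-site.xml, in which each setting is a `<property>` with a `<name>` and a `<value>`. In Java this is handled by Hadoop’s `Configuration` class; the Python sketch below only imitates the file format to show how a setting like `fs.defaultFS` is resolved. The NameNode host is a placeholder, and `load_properties` is a hypothetical helper, not part of Hadoop.

```python
import xml.etree.ElementTree as ET

# Illustrative core-site.xml contents; the NameNode host is a placeholder
CORE_SITE = """
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode.example.com:8020</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
"""


def load_properties(xml_text: str) -> dict[str, str]:
    """Collect every <property> in a Hadoop-style XML file into a name -> value dict."""
    root = ET.fromstring(xml_text.strip())
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.findall("property")
    }


if __name__ == "__main__":
    # Site settings override defaults, loosely mirroring core-default.xml vs core-site.xml
    defaults = {"fs.defaultFS": "file:///", "io.file.buffer.size": "4096"}
    conf = {**defaults, **load_properties(CORE_SITE)}
    print(conf["fs.defaultFS"])
```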

Reasons It Is Difficult to Develop a Hadoop App In-House

While the power of Hadoop is undeniable, harnessing it is far from simple. Building a Hadoop application in-house presents a steep learning curve and a host of operational challenges that can derail projects and inflate budgets. These difficulties span technical complexity, resource management, performance tuning, and talent acquisition. Partnering with an expert team that can provide strategic guidance, like a Fractional CTO, is often the most effective path forward.

Deep Architectural Complexity and Required Expertise

The single greatest barrier to in-house Hadoop development is its sheer complexity.

- Intricate Management: Simply managing a Hadoop cluster is a full-time job. It requires deep expertise in cluster configuration to set up the network of nodes correctly, ongoing maintenance to keep it running smoothly, and continuous optimization to ensure it performs under load.

- Deep Understanding Needed: A developer cannot simply “use” Hadoop without a profound understanding of its underlying architecture. They must know how HDFS distributes data, how YARN allocates resources, and how to write efficient MapReduce (or Spark) jobs that leverage the parallel nature of the system. A lack of this deep knowledge leads to inefficient applications that fail to deliver on the promise of Big Data processing.

Resource-Intensive Operations

Hadoop is not a lightweight framework.

- Setup and Maintenance: The initial setup of a multi-node cluster can be incredibly resource-intensive, both in terms of hardware costs and the man-hours required for configuration. Maintenance is an ongoing drain on resources, involving monitoring, patching, and troubleshooting across dozens or even hundreds of machines.

- Storage Overhead: HDFS achieves data reliability through replication, which, by default, triples the amount of storage space required for any given dataset. As data volumes grow into the terabytes and petabytes, this replication overhead can become prohibitively inefficient and costly for an organization to manage on its own.
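A back-of-the-envelope calculation shows how quickly replication adds up. The sketch assumes the default replication factor of 3 and the default 128 MB block size, and that data sits in large files so partially filled final blocks are negligible. The optional free-space headroom is an illustrative planning assumption, not a Hadoop setting, and both helpers are hypothetical.

```python
import math

MB = 1024 ** 2
TB = 1024 ** 4


def raw_storage_needed(logical_bytes: int, replication: int = 3, headroom: float = 0.0) -> int:
    """Disk to provision: every byte is stored `replication` times, plus optional free space."""
    return math.ceil(logical_bytes * replication / (1 - headroom))


def block_replicas(logical_bytes: int, replication: int = 3, block_size: int = 128 * MB) -> int:
    """Number of block replicas stored across the DataNodes."""
    return math.ceil(logical_bytes / block_size) * replication


if __name__ == "__main__":
    dataset = 100 * TB
    print(raw_storage_needed(dataset) / TB, "TB of raw disk for 100 TB of data")
    print(raw_storage_needed(dataset, headroom=0.25) / TB, "TB if 25% is kept free")
    print(block_replicas(dataset), "block replicas")
```

At 100 TB of data, the default settings already call for 300 TB of raw disk spread over roughly 2.5 million block replicas, before any free space is reserved.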

Performance and Latency Limitations

Despite its power for batch processing, Hadoop has limitations.

- Not for Real-Time: Hadoop, particularly the classic MapReduce framework, is not optimized for real-time data processing. It’s designed for high-throughput batch jobs that can take minutes or hours to run.

- Significant Latency: The latency inherent in MapReduce job startup and execution can be a significant drawback for applications that require near real-time analysis. This makes it a poor choice for use cases like interactive querying or live dashboards without significant architectural augmentation.

Inherent Security Vulnerabilities

Security is not enabled by default in Hadoop.

- Lacking Default Security: Out of the box, Hadoop runs without robust security measures. Authentication is set to “simple” mode, in which the cluster trusts whatever user name a client reports, and neither encryption for data at rest (on HDFS) nor encryption for data in transit (across the network) is enabled. This makes sensitive data highly vulnerable in a standard installation.

- Complexity of Integration: Adding necessary security features like encryption, authentication (e.g., Kerberos), and authorization involves integrating additional, often complex, tools into the ecosystem. This complicates the system further and requires specialized security expertise.
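Because these protections are switched off by default, a sensible first step is checking which ones a cluster actually has enabled. The sketch below audits a dictionary of configuration values against a handful of real Hadoop properties (`hadoop.security.authentication`, `hadoop.security.authorization`, `hadoop.rpc.protection`, and `dfs.encrypt.data.transfer`) and their shipped defaults. The `REQUIRED` table and `audit` function are hypothetical, the list is far from exhaustive, and it does not check encryption at rest, which needs separate setup.

```python
# Secure target values for a few real Hadoop properties, paired with their shipped defaults
REQUIRED = {
    "hadoop.security.authentication": ("kerberos", "simple"),
    "hadoop.security.authorization": ("true", "false"),
    "hadoop.rpc.protection": ("privacy", "authentication"),  # privacy = encrypt RPC traffic
    "dfs.encrypt.data.transfer": ("true", "false"),  # encrypt DataNode block transfers
}


def audit(conf: dict[str, str]) -> list[str]:
    """Return a warning for every property left at an insecure value (missing = default)."""
    warnings = []
    for name, (secure, default) in REQUIRED.items():
        actual = conf.get(name, default)
        # Exact match only; multi-valued settings such as "authentication,privacy" are flagged
        if actual.strip().lower() != secure:
            warnings.append(f"{name} = {actual!r} (expected {secure!r})")
    return warnings


if __name__ == "__main__":
    # An untouched installation: nothing is set, so every default applies
    for warning in audit({}):
        print("INSECURE:", warning)
```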

Diminishing Returns on Scalability

While Hadoop is built to scale, it’s not always a simple, linear process.

- Non-Linear Performance: Simply adding more nodes to a cluster does not always lead to a proportional improvement in performance.

- Overhead and Congestion: As a cluster grows, the management overhead required to keep it running increases. Furthermore, network congestion can become a major bottleneck, diminishing the benefits of adding more hardware.

Talent Scarcity and Cost

The expertise required to successfully manage and develop for Hadoop is both rare and expensive.

- High Demand for Professionals: The demand for professionals skilled in Hadoop, from administrators to data engineers, is consistently high.

- Finding and Retaining Talent: Finding personnel with the necessary expertise is a major challenge for many companies. Once found, retaining them can be very expensive, as they command high salaries in a competitive market. This makes building and sustaining an in-house team a significant financial commitment.

Cost Estimate for Developing a Hadoop App

Determining a precise cost for developing a Hadoop application is nearly impossible without a detailed project scope. The final price tag depends on a multitude of factors, including the complexity of the data processing logic, the scale of the data, the size of the development team, and the level of ongoing support required.

Hiring dedicated developers can make building a scalable Hadoop solution look affordable. However, this approach often hides the total cost of ownership. Hiring individual developers still leaves the burden of architectural design, project management, quality assurance, and ongoing cluster maintenance on your in-house team, if you even have one with the requisite skills.

A more predictable and value-driven approach is to partner with a full-service development agency. At MetaCTO, we focus on delivering a clear return on investment. Instead of just providing developers, we provide a complete solution. Our Rapid MVP Development service, for example, is designed to launch a functional product in just 90 days. This allows you to validate your idea, test it with real users, and secure stakeholder buy-in on a controlled budget and timeline, dramatically reducing the financial risk associated with a large-scale, open-ended development project.

Top Hadoop App Development Companies

Choosing the right development partner is the most critical decision you will make. You need a team that not only understands the backend complexities of Hadoop but also knows how to build a user-centric application that solves a real-world problem.

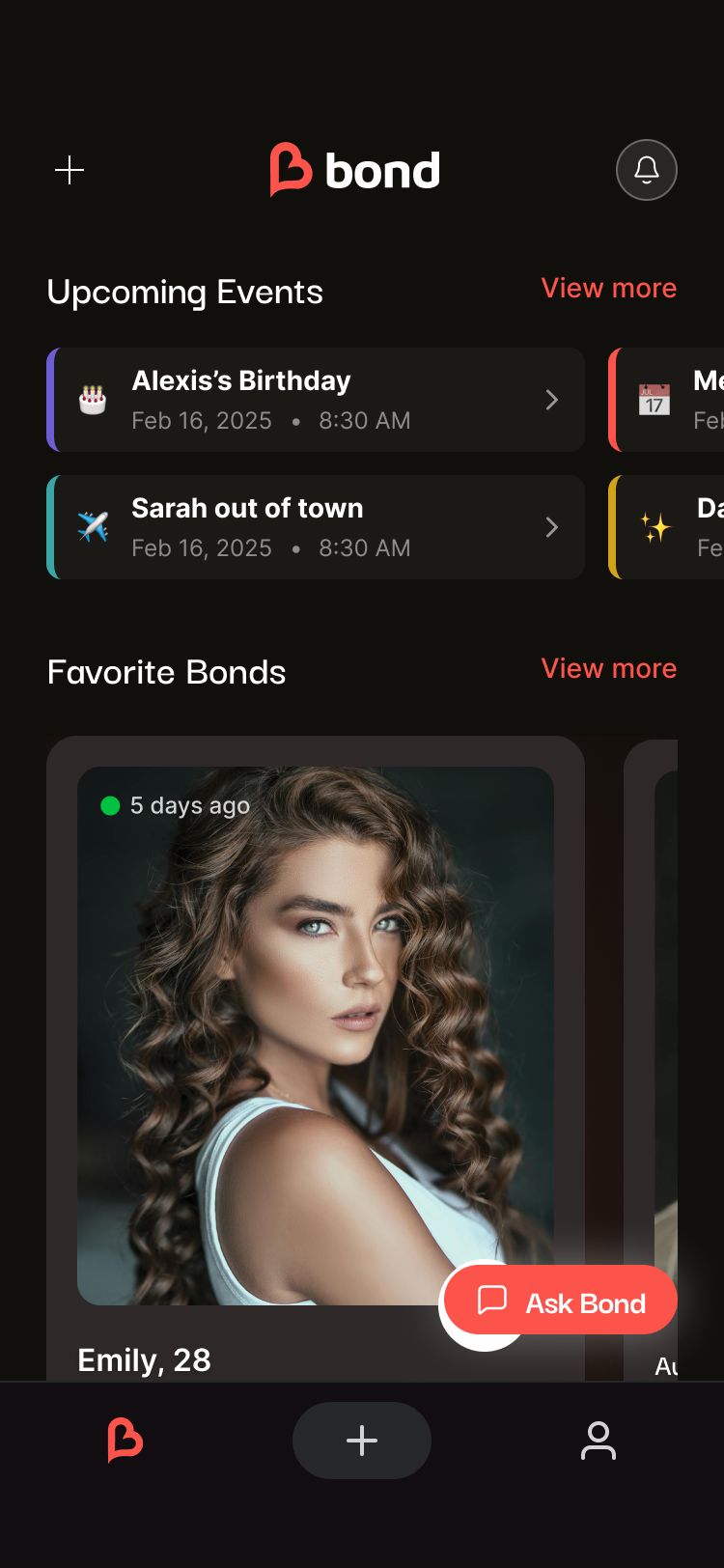

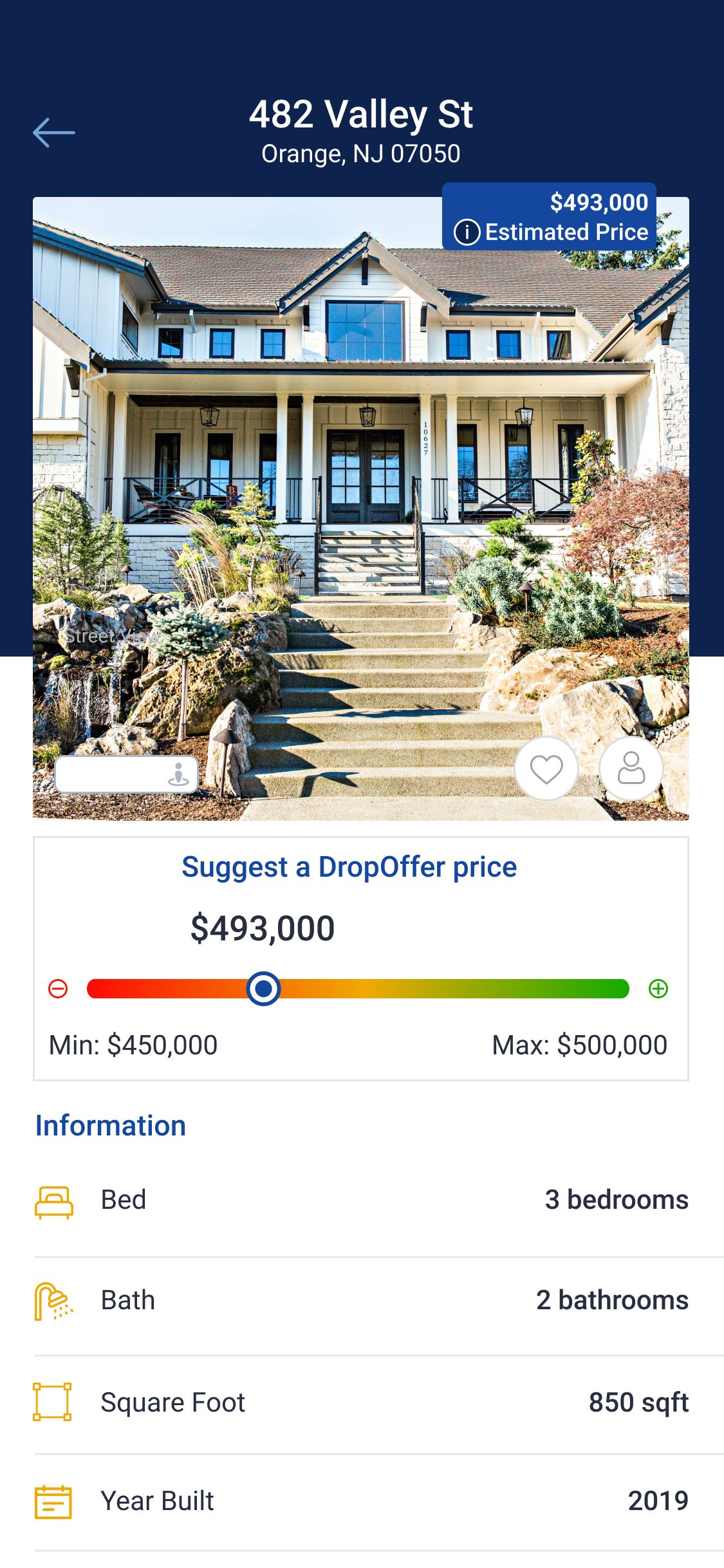

1. MetaCTO

At MetaCTO, we stand at the intersection of powerful backend technology and world-class mobile app development. We believe that the true value of Big Data is only realized when it can be accessed and utilized through intuitive, high-performance applications. With over 120 successful projects launched and more than $40 million in fundraising support for our clients, we have a proven track record of turning ambitious ideas into reality.

Why We Are Your Ideal Hadoop Partner:

- Mobile Integration Experts: Many businesses want to bring the power of their data to their users’ fingertips through a mobile app. However, integrating a data-intensive backend like Hadoop with a mobile client presents unique challenges. For instance, running data-heavy operations directly can be a significant drain on a phone’s battery life. Our expertise lies in designing efficient architectures that solve this. We build lean APIs and intelligent data synchronization strategies that minimize the load on the mobile device, ensuring a smooth, responsive user experience without compromising battery performance.

- AI-Enabled Solutions: Hadoop is often a key component in a larger data science or AI development pipeline. We are experts in building AI-powered applications, from custom machine learning models to advanced analytics, and we know how to use Hadoop as the foundational data platform to fuel these intelligent features.

- Full-Lifecycle Partnership: We are more than just developers; we are strategic partners. Our process covers every step of the journey:

- Validate: We help you turn your idea into a tangible MVP to test the market.

- Build: We handle the entire design, build, and launch process.

- Grow: We use analytics and A/B testing to optimize user acquisition and retention.

- Monetize: We help you implement the most effective strategies to turn your app into a revenue-generating asset.

- Evolve: We ensure your application scales and evolves with your business needs.

2. Dropbox

Dropbox is widely recognized as a major player in the cloud storage and file synchronization space. It is listed among the top Hadoop companies, and its technology stack includes application development, indicating its deep experience in building and maintaining large-scale, data-intensive systems that serve millions of users.

3. Vail Systems, Inc.

Vail Systems, Inc. is another company identified as a top Hadoop user. Their technology stack also includes app development, suggesting they leverage Hadoop to power their services and have the in-house capability to build applications on top of this complex framework, likely in the telecommunications and enterprise communication space.

Conclusion

The journey of Hadoop app development is one of immense potential and significant challenge. The framework’s architecture, built on HDFS, YARN, and processing models like MapReduce, offers an incredibly powerful solution for managing and analyzing Big Data. However, as we’ve explored, the technical complexity, resource requirements, security considerations, and scarcity of talent make in-house development a perilous undertaking for most organizations. The path is littered with potential pitfalls that can lead to spiraling costs and failed projects.

Partnering with a seasoned expert is not just an alternative; it’s a strategic advantage. A firm like MetaCTO brings not only the deep technical knowledge of Hadoop’s inner workings but also the crucial expertise in product strategy, user experience design, and mobile integration. We bridge the gap between raw data processing power and a polished, valuable product that users will love. We handle the architectural complexity so you can focus on your business goals.

If you are ready to unlock the value hidden within your data and transform it into a powerful application, the next step is to talk to a team that has done it before. Talk with a Hadoop expert at MetaCTO to discuss how we can integrate this powerful technology into your product and help you build, launch, and grow a successful application from day one.